Season 1 – Episode 39 – Voice as a Biomarker of Diseases

Discusses voice as a biomarker of diseases, including ethical considerations and how this technology could change the way we diagnose and monitor diseases.

Podcast Chapters



- Introduction and Guest Background (00:00:03) Host introduces the podcast, guest Yael Bensoussan, and outlines the episode’s focus on voice as a biomarker.

- Yael’s Journey to Voice Research (00:01:04) Yael shares her background in singing, speech pathology, and ENT, leading to her interest in voice health and research.

- Voice as a Biomarker Explained (00:02:46) Discussion of what it means to use voice as a biomarker and how voice reflects health status.

- Types of Diseases Identified by Voice (00:04:00) Overview of disease categories detectable via voice: voice disorders, voice- and speech-affecting disorders, and diseases unrelated to voice or speech.

- Data Collection and Quality Control (00:06:12) Explanation of the types of voice data collected, quality assurance, and privacy measures in the Bridge2AI Voice project.

- How AI Analyzes Voice Data (00:08:27) Description of AI methods for extracting features from voice and speech to identify health conditions.

- Ethical Considerations: Privacy and Consent (00:10:31) Discussion of privacy, consent, and the unique risks of sharing voice data compared to other biometrics.

- Addressing Bias in Voice Data (00:13:19) How the project accounts for bias in voice data, including demographic and linguistic diversity.

- The Need for Explainable AI (00:16:03) Importance of explainable AI for trust and adoption in clinical settings, with real-world anecdotes.

- Future of Voice Biomarkers in Healthcare (00:16:57) Predictions for how voice biomarker technology will evolve and integrate into healthcare, including ambient scribe systems.

- Challenges Ahead: Explainable AI and Trust (00:17:32) Key challenges to adoption, especially the need for explainable AI and building trust in the technology.

- Resources for Further Learning (00:19:07) Recommendations for resources, including the Bridge2AI Voice project website, YouTube channel, and annual symposium.

- Key Takeaway: Start Listening (00:20:22) Final advice to clinicians and listeners: pay attention to voice as a health indicator, both with AI and human intuition.

- Closing and Podcast Information (00:21:06) Host thanks Yael, shares information about other resources, and closes the episode.

Episode Transcript


Daniel Smith: Welcome to On Tech Ethics with CITI Program. Today, I’m going to speak to Yael Bensoussan, who is an assistant professor of otolaryngology at the University of South Florida Health Morsani College of Medicine and a fellowship-trained laryngologist. She is also the principal investigator of the Bridge2AI-Voice Project, a multi-institutional endeavor funded by the National Institutes of Health to fuel voice as a biomarker of diseases. In our conversation, we are going to discuss voice as a biomarker of diseases, including ethical considerations and how this technology could change the way we diagnose and monitor diseases.

Before we get started, I want to quickly note that this podcast is for educational purposes only. It is not designed to provide legal advice or legal guidance. You should consult with your organization’s attorneys if you have questions or concerns about the relevant laws and regulations that may be discussed in this podcast. In addition, the views expressed in this podcast are solely those of our guests. And on that note, welcome to the podcast, Yael.

Yael Bensoussan: Thank you so much for having me.

Daniel Smith: It’s great to have you. So I briefly introduced you and talked a bit about your background, but can you tell us more about yourself and what led you to explore the idea of voice as a biomarker of health through your work at the Morsani College of Medicine?

Yael Bensoussan: Yeah, of course. So I’m Canadian, and I actually did a lot of singing. I was a singer-songwriter in my past life. And then I studied speech pathology, which specifically helps people rehabilitate their voice. So I’ve always been really passionate about voice. And then I got into med school. I became an ENT surgeon knowing that I always wanted to treat people’s voices for a living, because I knew that people that lost their voice felt really bad. They lost their identity, they couldn’t communicate. So that was always a dream of mine. So I opened the voice center here with Dr. Stephanie Watts about five years ago, where we treat people with voice conditions. And then from a research perspective, we got really interested in, well, we can tell when people are sick because we listen to voices all the time, but can AI listen to it as well and make the same determination?

We’re not the only ones who are interested in this. Obviously, we came at the best moment, because a lot of people were interested at the same time. This is when we decided to apply to an NIH funding opportunity called the Bridge2AI Program, which had really traditional biomarkers like genomic biomarkers and radiology. And then we came with this theme of the voice. I think at first they were a little scared to fund us because they thought it was a little bit out of the box, and government money needs to fund things that are more secure, but they’re very happy now because they realize its potential.

Daniel Smith: That’s wonderful. I think you’ve alluded to this a bit, but just so our listeners fully understand and are on the same page, can you just briefly tell us what does it mean to use voice as a biomarker of health or disease?

Yael Bensoussan: Yeah, great question. So basically, when we talk, when we speak, there’s a lot of information in our speech or in our voice that could tell how healthy we are. For example, if you’re tired, the voice usually sounds a little bit lower, with a slower pace. If you’re sick, when you have laryngitis, for example, which is inflammation around your vocal cords, that’s going to make your voice sound different, rougher. And then people will ask, “Well, are you sick? You sound like you have laryngitis.” Right? So it’s really these types of cues that we’re trying to listen for in the voice. There’s the human way to do it, where you say, “Hey, your voice sounds rough. You probably have laryngitis.” But then we want to train artificial intelligence models to be able to do that at scale, because there are only a few of us who can listen to voices that way. What if we now had the tools to let anybody hear what we hear?

Daniel Smith: So I recently attended a talk where they shared about some of the diseases and disorders that you can identify using voice biomarkers. It was very interesting, things that people may not think could be possible through this. So can you talk a bit more about the type of work that you’re currently doing and the types of diseases and disorders that you’re able to identify using voice biomarkers?

Yael Bensoussan: Yeah, absolutely. So I always divide it into three different categories. First, there are what we call the voice disorders, which are specific diseases that affect your voice box. Obviously, they’re going to change your voice. So examples are voice box cancer, or laryngeal cancer, laryngitis, and something called vocal cord paralysis, where it’s really affecting your voice box. So the source of the sound will be different. Okay? Then you have another category of diseases that we call voice- and speech-affecting disorders. So it’s not that your voice box is affected. It’s really something else that’s affecting the way you speak. So for example, neurological disorders like Alzheimer’s, right? It’s not affecting the voice box per se, but it will have an impact on the words you say and on how the speech sounds. Parkinson’s, that’s another one. Things like pneumonia, right? It’s going to change the way you breathe when you speak, so it’s going to affect how you sound. So that’s the second category.

The third category, which is a lot less intuitive, is diseases that have nothing to do with speech or voice, and yet somehow the technology is good enough to find a difference. An example is diabetes. There are a few groups out there building models that can tell the difference between the voices of people who have diabetes and those who don’t, or who have hypertension. So when you think about it, it’s pretty crazy, because as a clinician, although I listen to voices all day, every day, I cannot tell from somebody’s voice whether they have diabetes. So I’m actually a little skeptical about the technology, because I want to be able to believe it, but those are things that are being investigated.

To give you a fun fact, a student from MIT who was playing with our dataset said, “Well, I was able to tell whether people were married or not from your data.” I said, “Were you really?” So sometimes we also have to question the technology a little bit, but these are the kinds of things that we’re investigating right now.

Daniel Smith: That’s really fascinating. So I do want to shift gears a bit into how the technology works. As a kind of foundational question, what kinds of data or recordings are typically used, and how do you ensure that they’re of sufficient quality and consistency?

Yael Bensoussan: Great question. So obviously, there are a lot of people out there who collect data. At Bridge2AI we really pride ourselves on providing accurate, high-quality data, because we’re building a resource for other people to use. So first of all, for all the patients who are enrolled, all the data is taken, for now, in our clinics, in conditions where we control for background noise. Then we go over 22 tasks, 22 bioacoustic tasks. So we record breathing, we record coughing, we record forced inhalation, all kinds of breathing sounds. Then we record voice tasks like “eh,” “ah,” and glides, all kinds of voice tasks that do not involve speech. And then we also record a lot of speech tasks: reading passages, and free speech where we ask open-ended questions. We also integrate a lot of tasks from the neuroscience world, where we ask people to describe pictures so we can look at their cognitive function.

So we have lots and lots of tasks that are recorded in standard conditions that we tested for a year. We actually did testing for a year before starting to collect data. And then we have a lot of QI processes with our collaborators at MIT, where they check the quality of the recording. Is it too loud, not loud enough? Can we hear other speakers? Because mistakes always come through. So before disseminating the data, we also have a very rigorous QI process. And for the ethics, the QI process also involves removing any information that identifies the patient, because, believe it or not (and this is a fun fact), when you ask people to tell you about their day, they say, “Well, today I came to see Dr. Bensoussan on November 20th. And I live at 266.” People can be quite incriminating when you ask them open-ended questions, so our process also makes sure that we don’t disclose any identifying information to the public.

Daniel Smith: That’s great. And that gives us a really good sense of the data, how it’s collected, how you process it, and so on. Now, can you walk us through how AI is used to analyze this data and offer health insights?

Yael Bensoussan: Yeah, of course. So again, we’re not the only ones doing this, so I think it’s important to say that the technology out there exists. There are multiple companies and research labs doing this work, but I can give you the basis of how things work. There are different types of features, or biomarkers, right? So when we talk, there are some voice features that can be analyzed. For example, the frequency at which we talk, right? To differentiate between a male and a female: usually the frequency of a female voice is around 200 hertz, and a male voice around 100 hertz. So that’s one feature. Then we can talk about features of how the voice shakes, or how stable the voice is. So those are features that we can extract.

And then when we think about speech features, we can talk about things like the rate of speech, right? The rate of speech, for example, is a really good biomarker for diseases like Parkinson’s. When people have Parkinson’s, they speak a lot slower, and actually, the frequency at which they speak is a lot more monotonous. So if you take an audio clip and extract the frequency range, how high and low they can go, then compared to somebody who doesn’t have Parkinson’s, the range will be a lot smaller, because they’re very monotonous, and the rate of speech will be a lot slower. So this is how the models work: we extract lots and lots of features from these audio clips. And then we build models and we say, “Well, these are all the features we extracted. Can you see the difference?” And then, based on different features, they can make a diagnosis.
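The feature-extraction idea described in this answer can be sketched in a few lines of Python with NumPy. This is a minimal illustration under stated assumptions, not the Bridge2AI pipeline or any company’s method: `estimate_f0` uses plain autocorrelation on a single voiced frame (production tools use more robust pitch trackers), the 60 to 400 Hz search band and the 5th-to-95th-percentile pitch range measured in semitones are choices made here for illustration, and all function names are hypothetical.

```python
import numpy as np

def estimate_f0(frame, sample_rate, f_min=60.0, f_max=400.0):
    """Estimate the fundamental frequency (Hz) of one voiced frame by autocorrelation."""
    x = np.asarray(frame, dtype=float)
    x = x - x.mean()
    # Autocorrelation at non-negative lags only
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]
    # Only search lags that correspond to plausible speaking pitches (assumed band)
    lag_min = int(sample_rate / f_max)
    lag_max = min(int(sample_rate / f_min), len(ac) - 1)
    best_lag = lag_min + int(np.argmax(ac[lag_min:lag_max + 1]))
    return sample_rate / best_lag

def pitch_range_semitones(f0_contour):
    """Spread of voiced F0 values in semitones (5th to 95th percentile)."""
    f0 = np.asarray(f0_contour, dtype=float)
    voiced = f0[np.isfinite(f0) & (f0 > 0)]  # NaN or 0 marks unvoiced frames
    if voiced.size < 2:
        return 0.0
    lo, hi = np.percentile(voiced, [5, 95])
    return float(12.0 * np.log2(hi / lo))

def speech_rate(word_count, duration_s):
    """Words per second over a recording."""
    return word_count / duration_s

def feature_vector(f0_contour, word_count, duration_s):
    """Hypothetical three-feature summary: mean F0, pitch range, speech rate."""
    f0 = np.asarray(f0_contour, dtype=float)
    voiced = f0[np.isfinite(f0) & (f0 > 0)]
    return np.array([voiced.mean(),
                     pitch_range_semitones(f0),
                     speech_rate(word_count, duration_s)])
```

For example, a 0.1-second synthetic 100 Hz sine sampled at 16 kHz gives an `estimate_f0` result at or very near 100 Hz. A flat, monotone F0 contour yields a smaller `pitch_range_semitones` value than a varied one, which is the kind of difference described for speakers with Parkinson’s; a classifier would then be trained on vectors like those returned by `feature_vector`.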

Alexa McClellan: I hope you’re enjoying this episode of On Tech Ethics. If you’re interested in hearing conversations about the research industry, join me, Alexa McClellan, for CITI’s other podcast called On Research with CITI Program. You can subscribe wherever you listen to podcasts. Now, back to the episode.

Daniel Smith: Shifting then into ethical considerations. I know you talked a bit about the de-identification of data and so on, but can you talk some more about the key ethical considerations in collecting and analyzing patients’ voice data, particularly around privacy, consent, and potential bias?

Yael Bensoussan: Yes, of course. First of all, I have to say that I am not an expert in ethics. We have a fantastic team, Dr. Vardit Ravitsky and Professor Jean-Christophe Bélisle-Pipon, who have been leading our ethics group for three years and have been really, really phenomenal. And voice is very different from anything else, right? Voice can be unique to an individual. Some people will say that voice is like a print, right? Like a fingerprint. But it’s not really, because you can’t change your fingerprint, but you can be sick and your voice will be different, right? So that’s really important to think about. At some point, people said, “Well, the voice is like a fingerprint. We should never share it, because we should never share fingerprints.” But we know that voice can change. We know that your voice and your brother’s voice could be very similar, right? We know that you can get sick, or you can get older, and your voice changes. So it’s not as unique as a fingerprint. You could also change your voice if you want to. You can mimic somebody else’s voice.

So that’s where all the ethical considerations come in. We consider voice a risk. When we share voice, there is a risk that people can try to re-identify you or try to harm you, but it’s not the same as sharing fingerprints, because voices change depending on how you feel that day, whether you’re sick or not, and how old you are. So that’s important, but it’s still really important to protect patient privacy, because people really care about their voice. Jean-Christophe Bélisle-Pipon did a lot of stakeholder surveys with patients, and people are cautious about sharing their voice because they’re scared, right? They hear a lot about voice cloning, they hear a lot about scams, and they want to be very careful. So we take this extremely seriously with our team, our lawyers, and our team of bioethicists, and we’ve put a lot of safeguards in place.

The most important one is how we disseminate the data. The goal is for other people to use our dataset, but we have a safer dataset, if I can call it that, where people have access to what we call the data transform. So we transform the acoustic data, the raw voice, into visual spectrograms and features that cannot re-identify somebody. This is a dataset that we share a lot more freely; people can access it just by registering. There are a lot fewer safeguards on that one, because we know that there’s no risk of re-identification. Researchers who want to access the raw data, on the other hand, really have to go through a lot of licenses, attestations, and contracts with our university, so that they are liable if anything happens to the data.
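The “data transform” idea, turning raw audio into spectrograms, can be illustrated with a short-time Fourier transform in NumPy. This is a sketch, not the project’s actual transform: the 400-sample frames and 160-sample hop (25 ms and 10 ms at an assumed 16 kHz sampling rate), the Hann window, and the function names are illustrative assumptions. The code only shows the transform itself; it does not by itself establish what a given representation can or cannot reveal about a speaker.

```python
import numpy as np

def magnitude_spectrogram(signal, frame_len=400, hop=160):
    """Return |STFT| as an array of shape (n_frames, frame_len // 2 + 1)."""
    x = np.asarray(signal, dtype=float)
    if len(x) < frame_len:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    # Keep magnitudes only; the phase of each frequency bin is discarded
    return np.abs(np.fft.rfft(frames, axis=1))

def to_decibels(spec, floor=1e-10):
    """Log-compress magnitudes, as spectrogram images usually are."""
    return 20.0 * np.log10(np.maximum(spec, floor))
```

With these settings each frequency bin is 40 Hz wide (16000 / 400), so a steady 200 Hz tone concentrates its energy in bin 5 of every frame.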

Daniel Smith: In terms of potential bias, how do you think about that?

Yael Bensoussan: So there’s obviously a lot of bias, right? Your voice can be different because of your accent, because of your degree of literacy, because of a lot of things. The first important thing to do to reduce bias is to collect a lot of varied and heterogeneous data, but it’s also important to tag it and account for it, right? Because if you don’t know about it, you won’t be able to tell your model. So for example, we ask so many questions about sociodemographics and confounders. We ask how many languages people speak, we look at whether they have an accent, we look at their literacy level and their socioeconomic status, so that when we build the technology, we take that into account and can try to explain the differences. So I think that’s really important.

Can we really make sure that there’s no bias? It’s impossible. In medicine, there’s bias all the time. I think accounting for it and trying to understand why the technology is giving us an answer is important. I can give you an example that’s a lot less theoretical. We were working on a biomarker called speech rate, so how fast you read a certain passage. And we knew that people with vocal cord paralysis took a lot more time to read a passage than healthy people. A healthy person took about 10 seconds; somebody with vocal cord paralysis took about 30 seconds. Then we ran a model, and we saw that one of the patients was wrongly attributed to the vocal cord paralysis group. When we looked at why and listened to the voice, it was clearly somebody who spoke a different language and was having a really hard time reading the text in English.

So when you explain it like this, I think people understand a lot better. And then the question is, how do we fix this? We fix this with methods of what we call explainable AI, and that has a lot to do with ethics and trust. How do we trust these technologies? We have to be able to explain our results, and that’s what our group is working on right now. We run a model, it’s great, but how do I know that the model is accurate? Well, let’s listen to the voice. Oh, actually, that person was an Indian speaker. That’s why it makes a difference. So these are the more complex scientific questions that I think are kind of beyond the hype. There’s a lot of hype: “We can diagnose every disease with a lot of accuracy.” But then when you test it in real life, what happens? And that’s really our goal with the Bridge2AI Project: to bring in real-world data so people are able to analyze real people.
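The misclassification story can be made concrete with a deliberately simple rule. This is a sketch under stated assumptions, not the model the guest’s team ran: it swaps their model for a single threshold on reading time, the 20-second cutoff is a hypothetical value placed between the roughly 10 s and 30 s reading times mentioned in the interview, and the `reads_in_native_language` field stands in for the kind of confounder tag (languages spoken, accent) the team collects. Returning a reason with each label is a minimal form of the explainability described here.

```python
from dataclasses import dataclass

# Hypothetical cutoff halfway between the ~10 s (healthy) and ~30 s
# (vocal cord paralysis) reading times mentioned in the interview.
READ_TIME_CUTOFF_S = 20.0

@dataclass
class Recording:
    participant_id: str
    read_time_s: float              # time to read the standard passage
    reads_in_native_language: bool  # confounder captured at enrollment

def classify(rec: Recording) -> tuple[str, str]:
    """Return (label, reason) so every decision can be inspected."""
    if rec.read_time_s <= READ_TIME_CUTOFF_S:
        return "typical", f"read time {rec.read_time_s:.0f}s <= {READ_TIME_CUTOFF_S:.0f}s"
    reason = f"read time {rec.read_time_s:.0f}s > {READ_TIME_CUTOFF_S:.0f}s"
    if not rec.reads_in_native_language:
        # Slow reading may reflect language, not disease: defer to a human listener
        return "needs review", reason + "; passage not in participant's native language"
    return "possible vocal cord paralysis", reason
```

Without the confounder field, a slow reader who is not reading in their native language would simply be labeled as possible vocal cord paralysis, which is the failure described above; with it, the rule defers to a human listener instead.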

Daniel Smith: I’m sure that you could talk much more about this, so I want to ask about some additional resources. But before getting to that, I want to hear more about where you see this technology and this field headed. How do you think this technology will evolve and change the way that we diagnose or monitor health and disease in the future?

Yael Bensoussan: Great question. So I have lots of hopes, and I think I also take on a lot of responsibility to be an advocate for this technology because of our role at Bridge2AI. So I think right now, with ambient scribes, things are changing a lot. Ambient scribe technology started about five years ago. Now, in many hospitals, when people go to their doctors, there’s ambient listening. And at the end of your conversation, the summary of your visit is written, so that your doctor pretty much doesn’t have to write a note. This technology was developed to relieve doctors of the administrative burden of writing notes. So that’s great.

But now, if you pair this technology with vocal biomarkers, at the end of your talk with your doctor, your doctor would get the summary, but the system could also say, “Well, your patient sounds like they’re really not doing well. They sound tired. They sound depressed. This is what you should do.” So I think this is probably where it’s going. There’s a lot of work still needed to get there. There are a lot of companies already offering products linked to ambient scribes to screen for conditions like cognitive decline, depression, and anxiety. So the technology is starting to be sold. Is it perfect? I don’t know. But it’s definitely being sold. So that’s where it’s going to go. But there’s also a lot of advocacy left to do, where we have to convince people that this is a really important biomarker. And I think that’s part of our job at Bridge2AI, because some people are still skeptical, right?

Daniel Smith: Yeah, absolutely. So going off of that a bit, what do you see as the biggest challenges that need to be addressed to get there?

Yael Bensoussan: I think first is going to be explainable AI. I think explainable AI is so important. I’ll give you another anecdote to try to illustrate it. I was speaking with Sanjay Gupta from CNN. We have this beautiful interview online, and he was talking to me about a technology. He said, “Well, I was using this startup’s technology on my phone that tells me how anxious I am and how stressed I am. I was on the phone, and the technology was telling me that I was really stressed, but I didn’t feel stressed. And then I asked my wife if she thought I was stressed, and she said, ‘Well, you kind of have your serious voice on.’ So I realized that maybe the technology was even better than me knowing if I was stressed.” So he was super impressed, but I’m like, “Or the technology’s wrong, and it just failed.” Right?

But without explainable AI, without a way for the technology to say, “Well, I think that you are stressed because the rate of your speech was slower today, because the tone of your voice is different,” without that piece, nobody’s going to trust that technology. Same thing in radiology. If you have an AI model that says, “I think that this is a lung cancer,” it has to show you why: “because I found an abnormal mass at image 52.” That’s important. So we need to build the same thing for voice, and that’s where the science is lacking right now, and that’s what we’re trying to work on.
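The kind of explanation asked for here (“because the rate of your speech was slower today”) can be sketched as a comparison against a speaker’s own history: compute each feature’s z-score relative to past recordings and report the largest deviations in plain words. This is one simple, assumed approach, not a method named in the interview; explainable-AI work on trained voice models (for example, attributing a model’s output to its input features) is considerably more involved. The function names and feature names are hypothetical.

```python
import numpy as np

def explain_deviation(baseline, today, names, top_k=2):
    """Rank features by how far today's value sits from the speaker's own baseline.

    baseline: array (n_sessions, n_features) of past measurements for one speaker
    today:    array (n_features,) for the current recording
    Returns a list of (feature_name, z_score) for the largest |z| values.
    """
    baseline = np.asarray(baseline, dtype=float)
    today = np.asarray(today, dtype=float)
    mu = baseline.mean(axis=0)
    sd = baseline.std(axis=0, ddof=1)
    sd = np.where(sd > 0, sd, 1e-9)  # guard against features that never varied
    z = (today - mu) / sd
    order = np.argsort(-np.abs(z))[:top_k]
    return [(names[i], float(z[i])) for i in order]

def describe(explanations):
    """Turn (name, z) pairs into a human-readable sentence."""
    parts = [f"{name} is {abs(z):.1f} SD {'above' if z > 0 else 'below'} your usual level"
             for name, z in explanations]
    return "; ".join(parts)
```

Passing feature names such as speech rate, mean F0, and pitch range would yield sentences of the form “speech rate is N SD below your usual level”, which is the shape of explanation a clinician or user could check by listening.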

Daniel Smith: So then I think that brings us back nicely to the additional resources where folks can learn more about explainable AI or voice as a biomarker, any of the issues that we’ve talked about today. Do you have some resources that you can share with us all?

Yael Bensoussan: Yes, of course. So if you go to our website, Bridge2AI-Voice, at B2AI-voice.org, you’ll find a lot of material on voice biomarkers, including a curriculum with training. We also have a YouTube channel with a lot of recorded presentations that you can look into. Explainable AI is something we are still working on, so I’m hoping that within six to 12 months, I’ll have a lot to share. We also have our international Voice AI Symposium coming up in May in Tampa and St. Petersburg. It happens every year, and it’s a fantastic international symposium that brings together people from all over the world: startups, industry leaders, ethicists, lawyers, and researchers who are working on vocal biomarkers. So if you are in the field, it’s really the place to be.

Daniel Smith: Awesome. I’ll be sure to include all of those in our show notes so that our listeners can check those out and learn more. On that note, my final question for you is, if you could leave our listeners with one key takeaway about voice as a biomarker, what would it be?

Yael Bensoussan: I would say start listening. When people hear us talk about this, they usually call me back about a week later and say, “Oh my god, I was listening to my mom, and she sounded really different. And then the next day she started getting sick.” So I think start listening, be aware. Especially clinicians. We’re kind of in an era where we don’t really listen to patients as much as we should anymore, or we don’t examine them. We always rely too much on technology. AI listening is great, but use your human listening to hear how people sound, because we can really understand a lot about people’s mood and health status. And I think that’s going to change how well we can implement this technology, if we have people’s buy-in.

Daniel Smith: I think that’s a wonderful place to leave our conversation for today. So thank you again, Yael.

Yael Bensoussan: Thank you so much for having me again.

Daniel Smith: If you enjoyed today’s conversation, I encourage you to check out CITI Program’s other podcasts, courses, and webinars. As technology evolves, so does the need for professionals who understand the ethical responsibilities of its development and use. CITI Program offers ethics-focused, self-paced courses on AI and other emerging technologies, cybersecurity, data management, and more. These courses will help you enhance your skills, deepen your expertise, and lead with integrity. If you’re not currently affiliated with a subscribing organization, you can sign up as an independent learner. Check out the link in this episode’s description to learn more. And I just want to give a last special thanks to our line producer, Evelyn Fornell, and production and distribution support provided by Raymond Longaray and Megan Stuart. With that, I look forward to bringing you all more conversations on all things tech ethics.

How to Listen and Subscribe to the Podcast

You can find On Tech Ethics with CITI Program on several of the most popular podcast services. Subscribe on your favorite platform to receive updates when episodes are newly released. You can also subscribe to this podcast by pasting “https://feeds.buzzsprout.com/2120643.rss” into your podcast app.

Recent Episodes

- Season 1 – Episode 38: Active Physical Intelligence Explained

- Season 1 – Episode 37: Understanding the CMS Proposed Rule for Hospital Outpatient and Ambulatory Surgical Centers

- Season 1 – Episode 36: Vibe Research and the Future of Science

- Season 1 – Episode 35: Managing Healthcare Cybersecurity Risks and Incidents

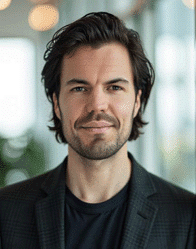

Meet the Guest

Yael Bensoussan, MD, MSc – University of South Florida

Dr. Yael Bensoussan is an Assistant Professor of Otolaryngology at the USF Health Morsani College of Medicine and a fellowship-trained laryngologist. She is also the PI of the Bridge2AI-Voice project, a multi-institutional endeavour funded by the NIH to fuel voice as a biomarker of diseases.

Meet the Host

Daniel Smith, Director of Content and Education and Host of On Tech Ethics Podcast – CITI Program

As Director of Content and Education at CITI Program, Daniel focuses on developing educational content in areas such as the responsible use of technologies, humane care and use of animals, and environmental health and safety. He received a BA in journalism and technical communication from Colorado State University.